The Ways of Counting --- Essay by Philip Emeagwali

HUMAN COMPUTING

"Can you do addition?" the White Queen asked. "What's one and one and one and one and one and one and one and one and one and one?" --- Lewis Carroll, Alice Through the Looking-Glass.

The word "computer" is often thought to have been coined with the advent of the first electronic computer in 1946. Actually, according to Webster's dictionary, the earliest recorded use of "computer" came exactly 300 years earlier, in 1646. From the 17th century until the turn of the 20th century, the term was used to describe people whose jobs involved calculating. In his 1834 book Memoirs of John Napier of Merchiston, Mark Napier wrote: "Many a man passes for a great mathematician because he is a huge computer. Hutton and Maseres were great calculators rather than great mathematicians. When their pages were full of figures and symbols, they were happy. [John] Napier alone, of all philosophers in all ages, made it the grand object of his life to obtain the power of calculation without its prolixity."

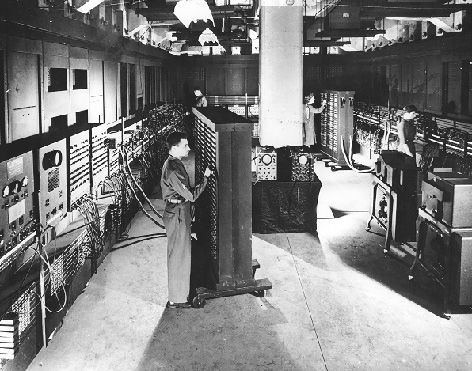

Human computers have both strengths and weaknesses. We humans have great powers of reasoning. Most of the things we do come from knowledge that we have developed culturally or that we have learned as so-called common-sense rules. We also have great tolerance for ambiguity. Confronted with a sentence that has several possible interpretations, humans can choose the correct interpretation for the given context. Humans also have the capacity for categorization, such as identifying and classifying birds or understanding regional variations in accented speech.

At the same time, we are unable to perform more than one calculation at a time; we compute sequentially. We compute at a very slow rate and with a very small memory. Even worse, our limited memory sometimes causes us to forget intermediate results and thus fail to reach solutions. We need to write down many intermediate results and can use only simple procedures to solve mathematical problems.

Since the human computer preceded the electronic computer, and since humans developed the electronic computer, we created it in our own image. This means that early electronic computers reflect our limitations. Just as the human computer has only one brain, so the early electronic computers had only one processor. Since our brain can perform only one calculation at a time, most computers were built to perform only one calculation at a time. The world's first large-scale general-purpose electronic computer, the ENIAC (Electronic Numerical Integrator And Computer), was designed with only one processor.

One of the primary problems that motivated the design of ENIAC was calculating the trajectories of ballistic missiles at the U.S. Army's Ballistic Research Laboratory in Aberdeen, Maryland. Before the invention of ENIAC, Army engineers used 176 human computers to perform the calculations needed to produce firing and bombing tables for gunnery officers. Such calculations must be sequential, and therefore they mapped well onto the early electronic computers and their sequential processing.

Another example of a technology matched to the human image is robotics. Early robots tended to have arms and/or legs and one centralized brain. This design has shifted recently to one in which robots have a network of small, simple brains (or processors), each devoted to a single job such as lifting an arm.

As mathematical reasoning progressed, however, it became apparent that the mathematical techniques suited to electronic computing diverged from those suited to the human brain. For example, the base-10 decimal system has proven to be the best for human computers, while the binary system based on the number 2, although too awkward for humans, is a natural match for electronic computers, where strings of bits with values of either 0 or 1 are used to store data.
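To make the contrast between the decimal and binary systems concrete, here is a minimal Python sketch that writes a decimal number as a string of bits and reads it back. The function names `to_binary` and `from_binary` are illustrative only and do not come from the essay; the repeated-division method shown is one common way to do the conversion, not a description of how any particular machine stores its data.

```python
def to_binary(n: int) -> str:
    """Return the base-2 digits of a non-negative integer as a string of 0s and 1s."""
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    bits = []
    while n > 0:
        bits.append(str(n % 2))  # the remainder is the next bit, lowest bit first
        n //= 2                  # drop that bit and continue with the rest
    return "".join(reversed(bits))  # highest bit goes on the left


def from_binary(bits: str) -> int:
    """Rebuild the integer from its string of bits, reading left to right."""
    value = 0
    for bit in bits:
        value = value * 2 + int(bit)  # shift left one place, then add the new bit
    return value


if __name__ == "__main__":
    for n in (10, 176, 1946):
        print(n, "->", to_binary(n))
```

A person finds 1946 far easier to read than its eleven-bit equivalent, while a circuit that only has to tell "on" from "off" finds the bits easier.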

COMPUTATION-INTENSIVE PROBLEMS

"'Firstly, I would like to move this pile from here to there,' he explained, pointing to an enormous mound of fine sand, 'but I'm afraid that all I have is this tiny tweezers.' And he gave them to Milo, who immediately began transporting one grain at a time." --- Norton Juster, The Phantom Tollbooth.

As mathematics progressed, the tasks undertaken by human computers grew increasingly complex. By the late 19th century, certain calculations were recognized as outstripping the calculating capacity of hundreds of human clerks. The 1880 United States census posed one of the biggest computational challenges of that time. To analyze the 1880 census results, the U.S. government employed an army of clerks (called "computers") for seven years. The population changed significantly during those seven years, however, rendering the results out of date before they were published. More timely results could have been obtained by employing even more clerks, but that would have been too costly.

WORKING IN PARALLEL

The human computers worked independently and simultaneously on the 1880 census data to produce numerous and voluminous sets of tables. In the field of computation their method is termed "working in parallel." It was the best technique known at that time, but since the results were still unacceptably slow in coming, the Census Bureau held a competition to find a more effective way to count the population. This competition led to the invention of Hollerith's Tabulating Machine, which was subsequently used to compute the U.S. population more accurately in 1890.
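The clerks' method can be sketched in a few lines of Python: divide the records into shares, let each "clerk" tally a share independently, then merge the partial tallies. This is only an illustration under stated assumptions. The names `tally` and `parallel_tally`, the sample occupations, and the choice of four clerks are all hypothetical, and a thread pool is used here simply to show the structure of the work, not to claim any particular speedup.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def tally(records):
    """One 'clerk': count how many records fall into each category."""
    return Counter(records)


def parallel_tally(records, clerks=4):
    """Split the records among several clerks, tally the shares, then merge."""
    if clerks < 1:
        raise ValueError("need at least one clerk")
    # Deal the records out like cards so every clerk gets a similar share.
    shares = [records[i::clerks] for i in range(clerks)]
    with ThreadPoolExecutor(max_workers=clerks) as pool:
        partial_counts = list(pool.map(tally, shares))
    # Merging is the only step that needs all the clerks' results together.
    total = Counter()
    for counts in partial_counts:
        total.update(counts)
    return total


if __name__ == "__main__":
    sample = ["farmer", "clerk", "farmer", "miner"] * 250  # made-up records
    print(parallel_tally(sample, clerks=4))
```

Because no clerk needs to see another clerk's share, the tallying step divides cleanly; only the final merge brings the results together.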

A DRAG STRIP FOR COMPUTERS

The century-old idea of holding a competition to solve computation-intensive problems was revived in 1986 by the Institute of Electrical and Electronics Engineers Computer Society with its annual Gordon Bell Prize Competition. As in 1890, competition has spurred advances in computing.

The solution of important computation-intensive problems, like those required in weather prediction, demands trillions, quadrillions or even more calculations, whether by human, mechanical or electronic devices. Performing these calculations one at a time, in a step-by-step fashion, is analogous to moving a pile of sand one grain at a time. It takes forever. Fortunately, many computation-intensive problems do not require the calculations to be performed one at a time. In fact, performing thousands and even millions of calculations at the same time is the only way to solve many computation-intensive problems quickly enough for the results to do some good. The latter approach is called massively parallel computing --- but with machines instead of the human computers that used this system in the 1880s.

NATURAL PARALLELISM

Researchers are now learning that many problems in nature, human society, science and engineering are naturally parallel, that is, that they can be effectively solved by using mathematical methods that work in parallel. These problems share the common thread of having a large number of similar "elements" such as animals, people and molecules. The interactions between the elements are guided by simple rules but their overall behavior is complex.

An individual ant is weak and slow, but ants have developed a method of foraging for food together with other ants. Their massively parallel approach is well described by the scientist and writer Lewis Thomas in The Lives of a Cell:

A solitary ant, afield, cannot be considered to have much of anything on his mind; indeed, with only a few neurons strung together by fibers, he can't be imagined to have a mind at all, much less a thought. He is more like a ganglion on legs. Four ants together, or 10, encircling a dead moth on a path, begin to look more like an idea. They fumble and shove, gradually moving the food toward the Hill, but as though by blind chance. It is only when you watch the dense mass of thousands of ants, crowded together around the Hill, blackening the ground, that you begin to see the whole beast, and now you observe it thinking, planning, calculating. It is an intelligence, a kind of live computer, with crawling bits for its wits.

Massive parallelism can also be found in human society. We see it in wars, elections, economics and other endeavors characterized by the simple, independent and simultaneous actions of millions of individuals. This parallelism is so natural that people aren't even aware of it. Adam Smith described it in The Wealth of Nations:

Every individual endeavors to employ his capital so that its produce may be of greatest value. He generally neither intends to promote the public interest, nor knows how much he is promoting it. He intends only his own security, only his own gain. And he is in this led by an invisible hand to promote an end which was no part of his intention. By pursuing his own interest he frequently promotes that of society more effectually than when he really intends to promote it.

In science and engineering the common thread that makes many problems naturally parallel is that they are governed by a small core of physical laws that are local and uniform. Local means that to know the temperature in, say, Detroit, in the next few minutes, we only have to know what is happening right now in the nearby suburbs of Detroit. Uniform means that the laws governing weather formation in Detroit are the same as in Timbuktu, Vladivostok or anywhere else. In weather forecasting, five uniform and local laws are used: conservation of mass; conservation of momentum; the conservation equation for moisture; the first law of thermodynamics; and the equation of state.
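A toy example may help show why local and uniform laws lend themselves to parallel computing. The Python sketch below spreads heat along a row of cells. It is not one of the five weather laws listed above; it is a deliberately simplified stand-in, and the function name `step`, the mixing factor `alpha` and the fixed end cells are assumptions made for illustration.

```python
def step(temps, alpha=0.25):
    """Advance a row of temperatures by one time step.

    Local: each new value uses only a cell and its two nearest neighbors.
    Uniform: the identical rule is applied at every interior cell.
    """
    new = list(temps)  # end cells are held fixed (an assumed boundary condition)
    for i in range(1, len(temps) - 1):
        # Every new[i] reads only OLD values, so no cell waits on another:
        # all of these updates could be carried out at the same moment.
        new[i] = temps[i] + alpha * (temps[i - 1] - 2 * temps[i] + temps[i + 1])
    return new


if __name__ == "__main__":
    row = [0, 0, 100, 0, 0]  # one hot cell in a cold row
    for _ in range(3):
        row = step(row)
        print(row)
```

Because the loop body never reads a value written during the same step, each cell could be handed to its own processor, which is the sense in which such problems "look" parallel.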

CHALLENGES IN COMPUTING

Constructing and running a computer model with the level of spatial resolution required for accurate computer-based weather forecasting takes quadrillions of arithmetical operations. Yet even this spatial resolution is too coarse to image tornadoes and other damaging local weather phenomena. Improving the spatial resolution enough to image local weather increases the amount of calculation required by tens of times and consequently makes the original problem computationally intractable even for a modern supercomputer.

The U.S. government has identified weather forecasting as one of 20 computation-intensive "grand challenges." Others include improving extraction of oil deposits, modeling the ocean, increasing computers' skill at understanding human speech and written language, mapping the human genome, and developing nuclear fusion. Many other problems in mathematics would take trillions of years to solve even with the fastest computers currently available.
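A rough back-of-the-envelope sketch suggests where a growth of "tens of times" can come from. It rests on assumptions the essay does not state: that the model's grid is refined equally in all three space dimensions, and that the time step must shrink in proportion to the grid spacing. The function `work_multiplier` and its parameters are hypothetical names used only for this illustration.

```python
def work_multiplier(refinement, space_dims=3, refine_time=True):
    """Estimate how much the operation count grows when grid spacing
    shrinks by the factor `refinement` (2 means cells half as wide).

    Assumes work is proportional to (grid points) x (time steps).
    """
    if refinement <= 0:
        raise ValueError("refinement must be positive")
    exponent = space_dims + (1 if refine_time else 0)
    return refinement ** exponent


if __name__ == "__main__":
    for r in (2, 3):
        print(f"spacing / {r}: about {work_multiplier(r)} times the work")
```

Under these assumptions, merely halving the grid spacing multiplies the work sixteenfold, and cutting it to a third multiplies it roughly eightyfold.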

EXPLOITING PARALLELISM: A PARADIGM SHIFT

Seminal ideas in science usually occur in a process that has been described as a series of paradigm shifts, that is, shifts in the dominant patterns or examples that humans use in thinking about or portraying "how things are." A well-known example of a paradigm shift is the transition from the belief that the Earth is flat to the belief that it is round. With this paradigm shift, Magellan became aware that he could travel around the globe.

If only one human computer had been used to analyze the 1880 census, the work would have taken forever to complete. Similarly, if only one computer were used to solve a computation-intensive problem of today, it would take forever to complete it. This paradigm of using one computer to solve a problem is called sequential computing. We are now living through a great paradigm shift in the field of computing, a shift from computing in the image of the human brain (sequential computing) to massively parallel computing, which employs thousands or more computers to solve one computation-intensive problem. Just as a paradigm shift in the belief about the shape of the Earth led to routine circumnavigations on the high seas, we will soon routinely solve important societal problems that are so computation-intensive that we had previously only dreamed of attempting them.

Examining the roots of this paradigm shift will show why continuing efforts to solve computation-intensive problems with sequential computers will succeed only as well as the early aircraft that attempted to fly by flapping bird-like wings. Since many computation-intensive problems are inherently parallel, it only makes sense to build and use a computer that exploits their inherent parallelism. Such a computer will give the best performance when it "looks" like the inherently parallel problems that it is trying to solve. The problems --- such as modeling atmospheric conditions, as discussed earlier --- arise from myriad simple interactions between thousands or millions of elements. As a result, the total calculations required carry us into the realm of "teraflops" computing.

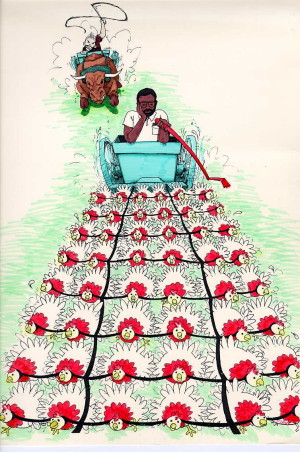

The cart pulled by the ox represents a conventional supercomputer. Intuition might lead one to think that the ox can outperform a multitude of well-trained, harnessed chickens pulling a cart. But the coordination of many smaller units results in better performance. Similarly, a massively parallel computer is faster and more powerful than a conventional supercomputer.

TERAFLOPS --- HARDLY A DINOSAUR

"Teraflops" may sound like the name of a dinosaur, but it describes no extinct creature. Rather, it names a level of computing yet to be achieved --- computing on the trillionfold level. The Holy Grail of large-scale computation is to attain a sustained teraflops rate in important problems. As Elizabeth Corcoran put it in January's Scientific American, "From the perspective of supercomputer designers, their decathlon is best described by three Ts --- a trillion operations a second, a trillion bytes of memory and data communications rate of a trillion bytes per second."

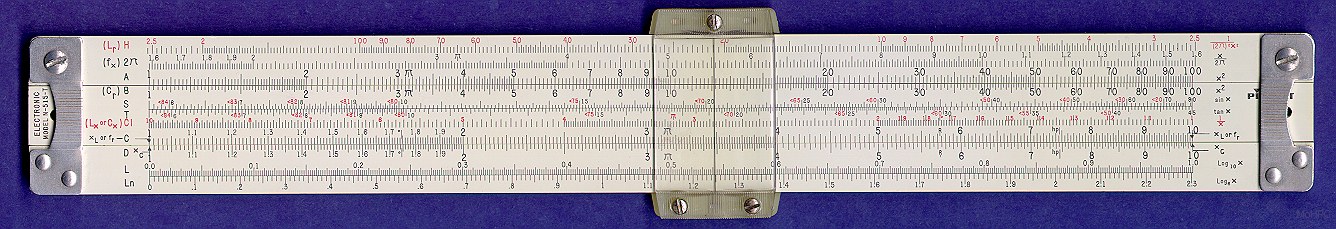

The effort to increase computing speed is an old one. It is the primary motivation that led to the invention of the abacus, logarithmic table, slide rule and electronic computer. However, these devices share one fundamental limitation: they are based on sequential computing, and thus are subject to the speed limits imposed by computing singly. As a result, some investigators now believe that massive parallelism is the only feasible approach that can be used to attain a teraflops rate of computation. Only massive parallelism can allow us to perform millions of calculations at once.

The most massively parallel computer built so far, known as the Connection Machine, uses 64,000 processors to perform 64,000 calculations at once. This approach makes it 64,000 times faster than using one processor. The Connection Machine has attained computational speeds in the range of five to 10 billion calculations per second. The next generation of the Connection Machine is expected to use one million processors to perform one million calculations at once. That could make it one million times faster than using a single processor. The target computational speed of this machine is one trillion calculations per second.
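The figures quoted above can be checked with a little arithmetic. The sketch below uses only numbers taken from the essay (64,000 processors, five to 10 billion calculations per second, one million processors, one trillion calculations per second, and the "quadrillions" of operations mentioned for weather models). It assumes the ideal case in which the work divides evenly among processors with no time lost to coordination; the function names are illustrative.

```python
CM_PROCESSORS = 64_000          # processors in the Connection Machine (from the essay)
CM_RATE_LOW = 5e9               # attained rate, low end, calculations per second
CM_RATE_HIGH = 10e9             # attained rate, high end
NEXT_PROCESSORS = 1_000_000     # processors expected in the next generation
TERAFLOPS = 1e12                # target rate: one trillion calculations per second


def per_processor_rate(total_rate, processors):
    """Calculations per second each processor contributes, assuming an even split."""
    return total_rate / processors


def ideal_seconds(total_operations, total_rate):
    """Time to finish a job at a given sustained rate, ignoring all overhead."""
    return total_operations / total_rate


if __name__ == "__main__":
    print(per_processor_rate(CM_RATE_LOW, CM_PROCESSORS))    # each current processor, low end
    print(per_processor_rate(CM_RATE_HIGH, CM_PROCESSORS))   # each current processor, high end
    print(per_processor_rate(TERAFLOPS, NEXT_PROCESSORS))    # each next-generation processor
    print(ideal_seconds(1e15, TERAFLOPS))                    # a quadrillion operations at teraflops
```

Read this way, each processor is individually modest, on the order of a hundred thousand calculations per second today; the speed comes from their number. At a sustained teraflops rate, a job of one quadrillion operations would take about a thousand seconds in this idealized picture.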

Massively parallel computers also have properties that make them far less costly than conventional computers. When the number of processors is doubled, the performance doubles, but the cost increases by only a few percentage points, since many components are shared by all the processors. A massively parallel computer also runs cooler than a conventional supercomputer. You can rest your hand on it while it runs; it doesn't require the $10,000-a-month energy bills needed to keep a mainframe supercomputer from overheating.

We have come a long way from the individual with an abacus to today's massively parallel computers. The motivation along the path from then to now has been the existence of computation-intensive problems requiring computing resources of larger magnitude than those available.

Despite humanity's great progress in computing, the national grand challenges compiled by the U.S. government identify computation-intensive problems that will inspire us to find even more new ways of computing. In five years, we should be computing at the teraflops level. It will be fascinating to see what achievements and new challenges will come after that.

CAPTIONS OF MISSING PHOTOS

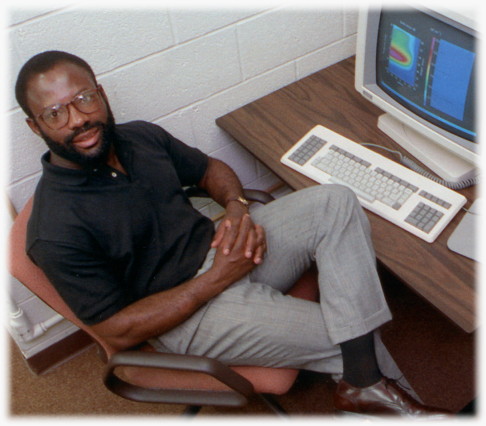

The author, Philip Emeagwali, programmed the Connection Machine to perform the world's fastest computation of 3.1 billion (3,100,000,000) calculations per second in 1989.

Please visit http://emeagwali.com for more technology essays by Philip Emeagwali.